the Creative Commons Attribution 4.0 License.

Development of a virtual flood experience system and its suitability as a flood risk communication tool

Masatoshi Denda

Masakazu Fujikane

Preventing late evacuation has been a challenge in Japan's flood management. Our hypothesis is that people often have little idea of how dangerous a flood can be and thus do not understand the importance of early evacuation. To help them realize the possible risk of flooding, we developed the Virtual Flood Experience System (VFES) using virtual reality (VR) technology. This tool is also expected to improve flood risk communication and flood evacuation training. We evaluated the realness of a virtual flood reconstructed by VFES and conducted an evacuation behaviour experiment using VFES. This paper gives an overview of VFES and reports the results of the evaluation and the experiment conducted in Aga Town, Niigata Prefecture, Japan. The results confirmed that VFES can successfully reconstruct the flood conditions caused by Typhoon No. 19 in 2019 and can quantitatively record differences in evacuation behaviour, and in the time required to select appropriate evacuation behaviour, between individuals with knowledge of flood risk and those without. This indicates its high potential as an effective risk communication tool capable of providing virtual flood experiences and assisting behavioural psychology experiments.

Preventing late evacuation has been a challenge in Japan's flood management. Evacuation training can be more practical if participants can imagine what flooding is really like, but it is often hard for them to do so. However, it becomes possible with virtual reality (VR) technology, which can provide a vivid image of flooding (Calil et al., 2021).

Fujimi and Fujimura (2020) studied how people would feel and act during flooding by creating a virtual flood situation in a virtual town. They found that a scene indicating the immediate danger of flooding is an important clue people use to start evacuation and that other people's behaviour, such as evacuation, also affects one's decisions about what to do during flooding. Though addressing some important aspects of human behaviour, the study did not quantitatively create a flood situation using numerical flood simulation.

To further investigate why people are sometimes too late to evacuate, flooding must be quantitatively reproduced using numerical simulation. If a town and flooding are realistically reproduced, people can virtually experience a flood situation that is likely to happen in their residential areas, and thus can more directly feel the level of danger they could be exposed to. To this end, we developed the Virtual Flood Experience System (VFES) using 3D spatial information, hydrologic and hydraulic numerical simulation, and VR technology. In this paper, we outline the system, including its flood reproduction ability and experiments of virtual flood evacuation.

2.1 System components

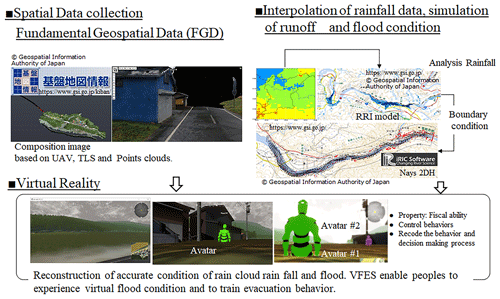

VFES is composed of three subsystems (Fig. 1): (1) the spatial information subsystem, (2) the hydrologic and hydraulic subsystem, and (3) the virtual reality integration subsystem.

2.2 Spatial information subsystem

The spatial information subsystem creates the basic spatial information for VFES using the Fundamental Geospatial Data (FGD) provided by the Geospatial Information Authority of Japan (GSI). FGD includes terrain data (5 m-grid digital elevation model), survey benchmarks, shorelines, administrative boundaries and their representative points, road edges, and building perimeters, and can be used to create the base map of the study site.

Although FGD provides enough information to develop the base map of the study site, it does not guarantee the accuracy needed to produce a precise map. For example, FGD places some buildings slightly off their actual positions. It also does not provide accurate vegetation information about plants and trees.

FGD also does not provide enough data to accurately illustrate roads running through tunnels three-dimensionally. If VFES uses FGD's road data without any additional processing, roads in tunnels will be illustrated as if they were pasted on mountain slopes. Therefore, highly accurate field surveys are necessary to reconstruct such structural features. VFES can import point cloud data collected by unmanned aerial vehicles (UAVs) and terrestrial laser scanners (TLSs) to accurately express roads in tunnels and vegetation on a map.

Although point clouds are powerful field measurement data, they also do not provide enough information to accurately reconstruct the planes, shapes, and textures of buildings. For their accurate reconstruction, 3D CAD is used to improve the buildings' shapes, and UAV-based aerial photographs are used to modify their textures. In this study, we also employ 3D CAD and photogrammetry for these purposes.

2.3 Hydrologic and hydraulic subsystem

The hydrologic and hydraulic subsystem is composed of the hydrologic and hydraulic units. The hydrologic unit simulates runoff and sets the upstream boundary discharge used as the input condition for 2D hydraulic simulation. Rainfall data observed at rain-gauge stations are interpolated to calculate the spatial distribution of rainfall, which is input to the Rainfall-Runoff-Inundation (RRI) model. The RRI model simulates basin-scale runoff and is calibrated with flow discharge. iRIC (Solver: Nays2DH), commonly used in Japan as a numerical simulation tool for river flow, flood prediction, and other similar purposes, is employed to perform 2D hydraulic simulations. Spatial information, such as topography and buildings, is imported into iRIC for 2D hydraulic simulation.
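The interpolation method applied to the rain-gauge data is not specified here. As an illustration only, the sketch below assumes inverse distance weighting (IDW), one common way to spread point observations over a grid. The function name `idw_rainfall`, the gauge coordinates, and the rainfall values are hypothetical and are not taken from the study.

```python
import math


def idw_rainfall(gauges, target, power=2.0):
    """Estimate rainfall at `target` (x, y) from gauges given as (x, y, rainfall_mm).

    Inverse distance weighting is an assumed method, not necessarily
    the one used to prepare the RRI model input.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for gx, gy, rain in gauges:
        dist = math.hypot(target[0] - gx, target[1] - gy)
        if dist == 0.0:
            return rain  # target coincides with a gauge: use its value directly
        weight = 1.0 / dist ** power
        weighted_sum += weight * rain
        weight_total += weight
    return weighted_sum / weight_total


# Hypothetical gauges (coordinates in km, hourly rainfall in mm); not observed data.
gauges = [(0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (0.0, 10.0, 30.0)]

# Rainfall field on a coarse 3 x 3 grid, row by row.
grid = [[idw_rainfall(gauges, (x, y)) for x in (0.0, 5.0, 10.0)]
        for y in (0.0, 5.0, 10.0)]
```

Every interpolated value lies between the smallest and largest gauge reading, so the field cannot overshoot the observations. This is one reason IDW is often chosen as a simple baseline.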

2.4 Virtual reality integration subsystem

VFES uses VR software to integrate spatial data reconstructed by the spatial information subsystem and flood conditions simulated by the hydrologic and hydraulic subsystem. Widely used game engines such as Unity and Unreal Engine (UE) are mainly utilized for this purpose. These types of VR software can reconstruct flood conditions with a high level of realism. Game engines enable users to apply virtual reality effects (VRE) to sky, light, rain, flood, and other features. People feel fear and sense the danger of flooding not only from how the surrounding landscape changes but also from lightning and thunder, for example. VRE can help create cloudy and rainy skies and add turbulence to the otherwise smooth water surface produced from numerical simulation data.

VR software is also excellent at providing virtual experiences. The software allows users to create an avatar, which is their virtual self in virtual space. It lets people create avatars with different features and capabilities and control them as they like. For example, a healthy young adult with no physical problems can create an avatar who needs support in evacuation during a flood event and experience possible problems they may face in an emergency.

VFES can be an effective tool to overcome late evacuation. The system can quantitatively reconstruct dangerous flooding conditions and provide “lesson” VR video clips created based on real flood experiences, from which people without flood experiences can learn about flooding and evacuation. If people learn through such clips about how dangerous flooding can be, what actions they should take, and how critical early evacuation is to avoid possible dangers, even those without flood experiences should be able to act appropriately and decide to evacuate early.

To confirm this possibility, we conducted a feasibility study on the potential of VFES as a risk communication tool for flood risk reduction. We first applied VFES to an area in eastern Japan that suffered severe flood damage when Typhoon No. 19 hit the region in 2019. Second, we evaluated the realness of the simulated flood conditions by comparing them with the actual conditions. Third, we interviewed local people living in the flood-affected area about the lessons they learned from the flood and the actions they now consider appropriate, and produced lesson VR video clips. Finally, we conducted an experiment with two groups of subjects, following methods used in psychological experiments, to investigate what decisions they would make in a virtual flood situation.

3.1 Study site

We selected the Agano River basin and Aga Town as the study area. The Agano River, located in central Japan, is 210 km long with a basin area of 7710 km². The Agano River is the second biggest river in Japan in terms of discharge, and the basin is considered one of the leading hydropower generation areas in the country.

The rich discharge supports abundant harvests in the area. For the same reason, large-scale flooding has repeatedly occurred in the Agano River basin in the past 30 years: the largest in 1988 and one of a similar magnitude in 2019 both caused severe damage to local communities along the river. We specifically selected Sanekawajima, a district severely affected by both events, as the study area in consultation with Aga Town officials.

3.2 Reproduction of the past flood condition using VFES and river engineering

We conducted field surveys in 2019 and 2020 to collect the spatial data of Aga Town. The surveys using UAV and TLS were conducted in 2019. Photogrammetry was conducted in 2020. The RRI model simulated the flood conditions in 2019 using rainfall data in October 2019. Two-dimensional flood simulations were conducted using RRI-model runoff results as the boundary condition. The spatial data and the flood simulation data were integrated using Unreal Engine (UE) and the field survey results.

3.3 Hearing of public comments

We held a meeting with Sanekawajima residents on 12 December 2021 to evaluate the realness of the images created by VFES and to hear their accounts of life-threatening experiences during the floods studied.

3.4 Creation of Lesson VR

We modified VR images based on the stories collected from the district's residents to improve the level of realness that the images convey. Also, based on their stories, we created VR video clips that contain the lessons the residents learned from their experiences. Using UE's movie camera function, we produced a number of flood images and edited them according to the scenarios prepared for the lesson VR video clips.

3.5 Effect of lesson VR video clips on evacuation behaviour

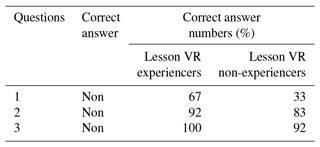

To evaluate whether lesson VR video clips improve evacuation behaviour, we conducted a virtual flood evacuation experiment using VFES with two groups of subjects, following methods used in psychological experiments. One group watched lesson VR video clips #1 to #3 (n=12), while the other watched none (n=12). The two groups answered questions about appropriate evacuation behaviour. The answers were analysed to determine the difference between the groups in the number of correct answers and in the time required to answer each question.
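The statistical test used to compare the number of correct answers is not stated. For two groups of 12 subjects, one reasonable option is Fisher's exact test on a 2 x 2 table of correct and incorrect answers. The sketch below is written under that assumption. The function name and the counts are hypothetical and are not the experimental results.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2 x 2 table [[a, b], [c, d]].

    Rows are groups (video clip / no video clip); columns are
    correct / incorrect answers. Returns the p-value.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = row1 + row2

    def prob(x):
        # Hypergeometric probability of x correct answers in the first group.
        return comb(row1, x) * comb(row2, col1 - x) / comb(total, col1)

    p_observed = prob(a)
    x_min = max(0, col1 - row2)
    x_max = min(row1, col1)
    # Sum the probabilities of all tables at least as unlikely as the observed one.
    return sum(prob(x) for x in range(x_min, x_max + 1)
               if prob(x) <= p_observed * (1 + 1e-9))


# Hypothetical counts for illustration only (n = 12 per group).
p_value = fisher_exact_two_sided(10, 2, 4, 8)
```

An exact test is preferable to a chi-squared approximation here because, with 12 subjects per group, expected cell counts can be small.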

4.1 Evaluation of reproduction accuracy of the past flood condition using VFES

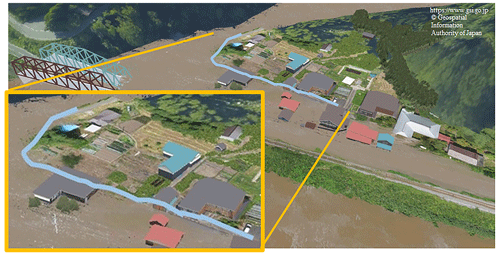

Figure 2 shows a bird's-eye view of a past flood scene reproduced using VFES. The blue line in the figure indicates the water edge line observed by Niigata Prefecture. The water edge line estimated by VFES matches the observed water edge line within a few meters. The comparison shows that VFES accurately reproduces the flood scenes. Utilizing 3D spatial data and hydrologic and hydraulic simulation technology, VFES successfully expresses the landscape and flood conditions in Sanekawajima District, including water levels and waves. Although the houses are not reproduced in detail to protect the residents' privacy, the distant landscape is expressed accurately, including house shapes, ridgelines, and the textures of structures and mountains. The results confirmed that VFES is fully capable of reproducing flood situations accurately enough to help people realize the danger of flooding.
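The agreement between the simulated and observed water edge lines can also be expressed as a number. The sketch below shows one way to do so, assuming both lines are available as polylines in a projected coordinate system with units of metres. The function names and coordinates are hypothetical and do not come from the study data.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to the line segment a-b (all (x, y) tuples)."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment
    # Project p onto the segment and clamp to its end points.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def edge_line_offsets(simulated, observed):
    """Distance from each simulated vertex to the nearest part of the observed line."""
    return [
        min(point_segment_distance(p, observed[i], observed[i + 1])
            for i in range(len(observed) - 1))
        for p in simulated
    ]


# Hypothetical polylines in metres; not the surveyed or simulated lines.
observed_edge = [(0.0, 0.0), (100.0, 0.0), (200.0, 10.0)]
simulated_edge = [(0.0, 2.0), (50.0, -1.5), (100.0, 3.0), (200.0, 10.0)]

offsets = edge_line_offsets(simulated_edge, observed_edge)
max_offset = max(offsets)
mean_offset = sum(offsets) / len(offsets)
```

Reporting both the maximum and the mean offset separates a single local mismatch from a systematic shift of the whole edge line.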

4.2 Creation of lesson VR

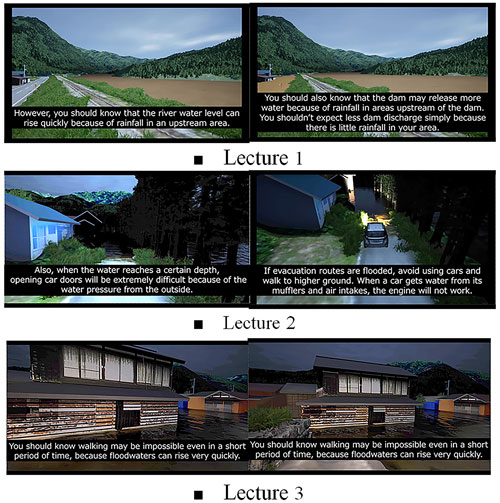

Figure 3 shows the storyboards of the lesson VR video clips. We selected three topics from the stories we heard from Sanekawajima residents.

Lesson VR video clip #1 is designed to convey the message, “Experience sometimes fails you,” based on a resident's story that flooding occurred after a dam discharge. The main idea of lesson VR video clip #2 is “Evacuation by car can be life-threatening”, derived from another story about a car caught in flooding and soon submerged when evacuating at night. This clip primarily focuses on the possible danger of evacuation in a vehicle but also warns about night-time evacuation when people do not have good visibility. Lesson VR video clip #3's message is “Do not return home for things you left”, which is from an episode in which a resident went back home to get something he forgot to take with him when he evacuated, only to be exposed to violent flooding. In addition to the message, it also points out that floodwaters can pose a life-threatening risk when they reach a certain depth.

4.3 Influence of lesson VR knowledge on evacuation behaviour

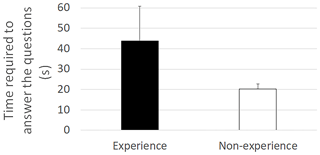

Table 1 and Fig. 4 compare the responses and the time required to answer the questions between the two groups of subjects, one of which watched the lesson video clips while the other did not. Significantly more subjects answered correctly in the video-clip group than in the no-video-clip group in case 1. The difference between the two groups in cases 2 and 3 is not very clear. The same tendency appears when the two groups are compared in terms of the time they took to answer the questions.
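With only 12 subjects per group, a difference in answering time can be assessed without assuming normally distributed data. The sketch below uses a permutation test on the difference in mean response time. This is an assumed method offered for illustration, not the analysis behind Fig. 4. The function name and the times are hypothetical.

```python
import random


def permutation_test_mean_diff(group_a, group_b, n_permutations=5000, seed=0):
    """Two-sided permutation test for a difference in mean response time.

    Returns the estimated p-value: the share of random relabellings whose
    absolute mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical response times in seconds; not measured values.
with_clip = [12.1, 9.8, 14.3, 11.0, 13.5, 10.2]
without_clip = [8.4, 7.9, 9.1, 10.5, 8.8, 9.6]

p_time = permutation_test_mean_diff(with_clip, without_clip)
```

The same function can be applied case by case, giving one p-value for each question in the experiment.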

The work indicates the high potential of VFES as a risk communication tool. In order to achieve effective risk communication, it is crucial to share and communicate experiences and knowledge among people in a community.

In previous research and activities on disaster risk communication, flood experiences and knowledge have been shared mostly orally by survivors, with some photos. This approach has been found limited in communicating experiences and knowledge to other people or passing them on from generation to generation. VFES can fundamentally change this situation, since it quantitatively reconstructs both near and distant landscapes and creates flood conditions using VR images for locations where actual flood conditions were not observed during the event. In addition, VFES can dynamically create and show scenes in a flood situation from various viewpoints. The VFES-driven video clips can thus provide information that was not previously available. Oral storytelling is not well suited to describing a situation in detail, and thus fails to elaborate on the actual conditions of flooding and to convey the real danger of the situation. This weakness often prevents people with no flood experience from having a clear idea of how dangerous flooding can be. VFES can help overcome this challenge.

Our research has also demonstrated the promising potential of VFES as a research tool. Fujimi and Fujimura (2020) indicated the potential roles that VR can play in providing virtual flood experiences and assisting experiments. As our research has progressed, we have identified further research topics that build on the potential of VR.

The following summarizes our current research hypotheses, although the research is still at a very early stage.

First, we hypothesize that visual information is highly likely to communicate the danger of flooding to people. The more visual information people have about possible dangers, the more likely they are to sense them and take appropriate actions. In cases 2 and 3 of our experiment, there was not much difference between the two groups of subjects in the percentage of correct answers and the time required to answer the questions. This is probably because both cases provide high-quality visual information by successfully reproducing the scenes that warn people about the danger of flooding at night.

Our second hypothesis is that knowledge matters when people have no access to visual information, for example, when they are unable to see possible hazards. In case 1 of our experiment, in which the subjects could not see how critical the flood situation was, they were asked if they would go to the river to check the situation and decide whether to evacuate. The subjects who watched the lesson video clip were far more likely to choose the answer “No, I won't.” than those who did not. This is probably because they chose the answer based on the knowledge they gained from the video clip. However, the video-clip group took longer to answer the question than the no-video-clip group. We assume that they still wondered whether they should go and check the river, even though they knew from the video clip that they should not.

Our research has just begun, and we will continue conducting more experiments to test these hypotheses.

This study developed the “Virtual Flood Experience System (VFES)” using virtual reality (VR). We evaluated the realness of a virtual flood reconstructed by VFES and conducted evacuation behaviour experiments using VFES. The results confirmed that VFES can reconstruct high-quality flood conditions and quantitatively record the difference in evacuation behaviour, indicating its high potential as an effective risk communication tool.

The code is not publicly accessible. VFES is currently under development.

The data are not publicly accessible. They concern the psychological processes of individual subjects and must be treated with extra care in terms of research ethics.

Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Resources, Validation, Visualization, Writing – original draft preparation, Writing – review & editing: MD, Supervision: MF.

The contact author has declared that neither of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This article is part of the special issue “ICFM9 – River Basin Disaster Resilience and Sustainability by All”. It is a result of The 9th International Conference on Flood Management, Tsukuba, Japan, 18–22 February 2023.

We would like to thank the local government staff and residents of Aga Town for their tremendous contribution and cooperation during the field and interview surveys.

This paper was edited by Svenja Fischer and reviewed by two anonymous referees.

Calil, J., Fauville, G., Queiroz, A., Leo, K., Mann, A., Wise-West, T., Salvatore, P., and Bailenson, J.: Using Virtual Reality in Sea Level Rise Planning and Community Engagement – An Overview, Water, 13, 1142, https://doi.org/10.3390/w13091142, 2021.

Fujimi, T. and Fujimura, K.: Testing public interventions for flash flood evacuation through environmental and social cues: The merit of virtual reality experiments, Int. J. Disaster Risk Reduct., 50, 101690, https://doi.org/10.1016/j.ijdrr.2020.101690, 2020.